Every year, the Association for Computing Machinery—the world's largest scientific and educational computing society—gathers to explore the future of computer interaction in a legendary conference called CHI. It's an amazing event, in which thousands of researchers, scientists, and futurists get together to push the boundaries of what it means to interact with machines.

It's a dizzying collision involving enough ideas about what the future of man and machine will look like to put the world's science-fiction authors out of their jobs for good. This year's CHI 2016 conference in San Jose was no exception—but among the hundreds of projects, here are eight that stood out.

Haptic Retargeting

The problem: In VR, objects might look real, but they don't feel real. In fact, for the most part, they don't feel like anything at all. So Microsoft Research came up with a system using a very limited number of physical props to take the place of a nearly infinite number of virtual objects.

They call it "haptic retargeting," and it works by tricking a VR user into thinking they aren't interacting with the same prop over and over again. It does this by skewing a user's virtual vision so the object they think they are reaching for in-game is the physical prop they already interacted with in meatspace.

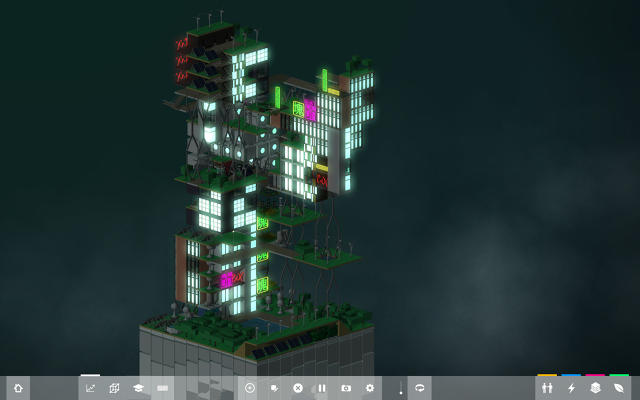

It's hard to put into words, so just watch the video above. In it, Microsoft Research shows how haptic retargeting could be used to trick a VR Minecraft player into thinking he's physically stacking innumerable blocks, when in reality he's just moving the same block around over and over again.
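For the code-minded, the trick can be sketched in a few lines. What follows is a simplified linear warp, not Microsoft Research's actual implementation: the function name, the coordinates, and the blending rule are all assumptions made for illustration. The idea is that the virtual hand drifts away from the real hand as the reach progresses, so that when the real hand lands on the one physical prop, the virtual hand lands on whichever virtual object the player was aiming for.

```python
import math

def warp_hand(real_hand, start, physical_prop, virtual_target):
    """Return where to draw the virtual hand for a given real hand position.

    Hypothetical helper: blends in the offset between the virtual target
    and the physical prop as the real hand travels from start to prop.
    All positions are (x, y, z) tuples in meters.
    """
    d_start = math.dist(real_hand, start)
    d_prop = math.dist(real_hand, physical_prop)
    total = d_start + d_prop
    # alpha is 0 at the start of the reach and 1 when the hand touches the prop.
    alpha = 1.0 if total == 0 else d_start / total
    offset = [v - p for v, p in zip(virtual_target, physical_prop)]
    return tuple(h + alpha * o for h, o in zip(real_hand, offset))

# One real block on the table, drawn in VR as a block 20 cm to the side.
start = (0.0, 0.0, 0.0)
prop = (0.4, 0.0, 0.0)
target = (0.4, 0.2, 0.0)

halfway = warp_hand((0.2, 0.0, 0.0), start, prop, target)
arrived = warp_hand(prop, start, prop, target)
```

Because the offset grows gradually, no single frame shows a jump, which is presumably why the player doesn't notice that the hand has been steered.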

Dexta Haptic Gloves

Speaking of haptics, another company with a very different plan for allowing people to "feel" virtual reality is Dexta Robotics, which has come up with a set of exoskeleton-style gloves that lets VR push back.

Here's how they work. Upon entering virtual reality, Dexta's Dexmo Gloves simulate feedback by locking and unlocking finger joints when you try to touch digital objects with varying degrees of force. Using this relatively simple technique, the gloves can simulate haptic sensations such as hardness, springiness, softness, and more.

As Dexta Robotics explains: "How will this force feedback technology affect your VR experience? When inside the virtual environment, you can feel the difference between elastic and rigid virtual objects. You'll be able to hold a gun and feel a realistic 'clicky' level of resistance from the trigger. More subtly, you'll be able to pick up a virtual object and discern by touch whether it's a rubber ball or a rock."

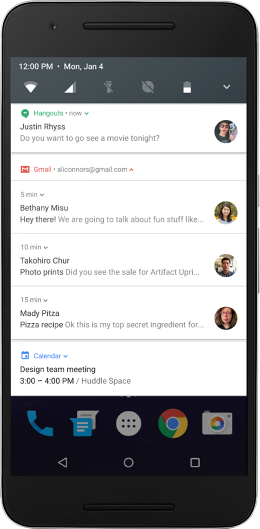

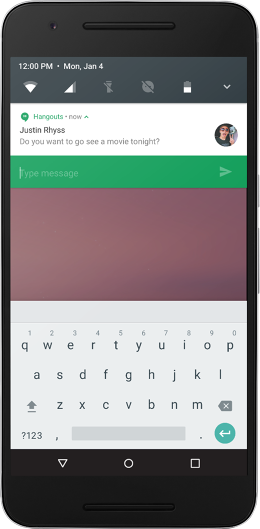

Pre-Touch

What if your smartphone could read your mind? That's what Microsoft Research is trying to do with "pre-touch sensing." It showed off a new type of smartphone that can detect how it's being gripped, and also detect when a finger is approaching the screen. This could open up some amazing new UI possibilities in mobile by improving the precision of tapping on small on-screen elements, and dynamically adjusting what on-screen interface a user sees according to how they're holding their device—or if a finger is approaching the screen. For more detail, read our full article on the new touchscreen here.

PaperID

What if paper could be just as interactive as a touchscreen? Researchers from the University of Washington, Disney Research, and Carnegie Mellon University have figured out how to do that, giving a piece of paper the ability to sense its surroundings and respond to gesture commands, as well as connect to the Internet of Things.

It's called PaperID, and it uses a printable antenna. The possibilities are fascinating: Imagine a physical book that is linked to your Kindle e-book, so that turning a page in the real world also turns the page on your e-reader. Or imagine a page of sheet music that can detect the motion of a conductor's baton being waved over it.

SkinTrack

A smartwatch screen just isn't big enough to allow many interactions. You have room to tap or swipe the screen, but that's pretty much it. SkinTrack, a new technology developed by the Future Interfaces Group at Carnegie Mellon University's Human-Computer Interaction Institute, expands your smartwatch's "touchscreen" over your entire hand and arm. All you have to do is wear a specially designed ring.

Imagine using your palm to make a call on your Apple Watch by hovering a finger over your hand, acting as a cursor on a dial pad. SkinTrack could even be used to allow you to play more sophisticated video games on your wearable, by enabling a far broader library of gestures to control what's happening on that postage stamp-sized screen. Find out more about SkinTrack here.

Materiable

Materiable is the latest incarnation of Inform, a physical interface of moving "pixels" developed by MIT's Tangible Media Group in 2013. Materiable gives this existing Inform display the ability to mimic the tactile qualities of real-world materials, like rubber, water, sand, and more. Depending on the settings, flicking the surface of an Inform might make all of its pixels ripple, or quiver like jelly, or even bounce like a rubber ball. It's all accomplished by giving each individual Inform pixel its own ability to detect pressure, and then respond with simulated physics. It's like a big block of shape-shifting digital clay, which can be used in a variety of mind-blowing ways by designers, medical students, even seismologists.
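To get a feel for what "respond with simulated physics" could mean, here is a minimal sketch of one row of pins modeled as damped springs that tug on their neighbors. It is not the Tangible Media Group's code: the material presets, the parameter values, and the function names are hypothetical, chosen only to show how changing a few numbers changes how a poke spreads and settles.

```python
# Hypothetical material presets; the numbers are illustrative, not MIT's.
MATERIALS = {
    "jelly": {"stiffness": 20.0, "coupling": 50.0, "damping": 1.0},
    "rubber": {"stiffness": 80.0, "coupling": 10.0, "damping": 6.0},
}

def step(heights, velocities, material, dt=0.01):
    """Advance every pin by one time step (semi-implicit Euler)."""
    n = len(heights)
    new_velocities = []
    for i in range(n):
        # Pins past the edge are treated as resting at height 0 (a fixed frame).
        left = heights[i - 1] if i > 0 else 0.0
        right = heights[i + 1] if i < n - 1 else 0.0
        force = (
            -material["stiffness"] * heights[i]                     # pull back to rest
            + material["coupling"] * (left + right - 2 * heights[i])  # pull toward neighbors
            - material["damping"] * velocities[i]                   # lose energy
        )
        new_velocities.append(velocities[i] + force * dt)
    new_heights = [h + v * dt for h, v in zip(heights, new_velocities)]
    return new_heights, new_velocities

def poke(material_name, pins=9, depth=1.0, steps=1):
    """Press the middle pin down by `depth`, release it, and simulate."""
    material = MATERIALS[material_name]
    heights = [0.0] * pins
    velocities = [0.0] * pins
    heights[pins // 2] = -depth  # the detected press
    for _ in range(steps):
        heights, velocities = step(heights, velocities, material)
    return heights

wobble = poke("jelly", steps=50)     # mid-ripple
settled = poke("jelly", steps=5000)  # long after the poke
```

In this toy model, a high coupling value spreads a poke outward as a ripple, while high stiffness and damping make the surface snap back and go still quickly.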

For more details, read about Materiable here.

Holoflex

Remember that bendable screen Co.Design covered a few months ago? Holoflex is the next generation of that screen: a flexible smartphone that you bend to interact with. What's groundbreaking about the Holoflex, though, is that its display is truly holographic. Two people viewing a 3-D teacup on the display would both see it from the correct perspective, regardless of where they were positioned in relation to the screen.

The Holoflex is capable of achieving this neat trick by projecting 12-pixel-wide circular "blocks" through more than 16,000 fisheye lenses. It's really low resolution right now (160 x 104—less than the original Apple II), but give this technology five years, and we might all be walking around with holographic iPhones. Read more information about the Holoflex here.
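The numbers above are easy to sanity-check. The short calculation below assumes that the 160 x 104 figure counts lenses, one per visible image point, and that each lens covers a full 12-pixel-wide circle. The Apple II figure is not from the Holoflex team; it is that computer's 280 x 192 high-resolution graphics mode, added here for comparison.

```python
import math

# Figures quoted in the article.
LENS_GRID = (160, 104)     # effective resolution, assumed to be one lens per point
BLOCK_DIAMETER_PX = 12     # each lens sits over a 12-pixel-wide circular block

# Not from the article: the Apple II's hi-res graphics mode, for comparison.
APPLE_II_HIRES = (280, 192)

lens_count = LENS_GRID[0] * LENS_GRID[1]
apple_ii_pixels = APPLE_II_HIRES[0] * APPLE_II_HIRES[1]
# Area of a circle 12 pixels across: a rough upper bound on pixels per lens.
pixels_per_lens = math.pi * (BLOCK_DIAMETER_PX / 2) ** 2

print(f"lenses: {lens_count:,}")                      # lenses: 16,640
print(f"Apple II hi-res pixels: {apple_ii_pixels:,}")  # Apple II hi-res pixels: 53,760
print(f"pixels under each lens: about {pixels_per_lens:.0f}")  # about 113
```

So 160 x 104 works out to 16,640 lenses, which squares with "more than 16,000." The hundred or so pixels hidden under each lens are, presumably, what gets spent on showing a different image to each viewing angle instead of on sharpness.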

SparseLight

Augmented reality headsets like Microsoft's HoloLens allow wearers to see the physical and the digital at the same time, but the lenses they depend on have such a small field of view that it's easy for the effect to be ruined. The same is true in VR, where the scene you're viewing often looks like it's at the end of a long tunnel.

Microsoft's been thinking about this problem, and SparseLight is its solution. The idea is that you can augment the field of view in head-mounted displays by putting cheap grids of LEDs in the periphery of a user's vision. Because humans' peripheral vision is so much fuzzier than what we're directly looking at, these LEDs essentially just match an object's color and brightness, and trick our brains into thinking we're seeing the whole thing.
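Matching "color and brightness" can be as simple as averaging. The sketch below is an assumption about how such a grid might be driven, not Microsoft's method: it takes a low-detail image of whatever lies in the periphery (here a nested list of RGB tuples), carves it into one cell per LED, and lights each LED with its cell's average color. The function name and the frame format are made up for the example.

```python
def led_colors(frame, rows, cols):
    """Average an RGB frame down to a rows x cols grid of LED colors.

    `frame` is a list of pixel rows, each pixel an (r, g, b) tuple of 0-255 ints.
    Assumes rows <= frame height and cols <= frame width, so no cell is empty.
    """
    height, width = len(frame), len(frame[0])
    leds = []
    for r in range(rows):
        y0, y1 = r * height // rows, (r + 1) * height // rows
        led_row = []
        for c in range(cols):
            x0, x1 = c * width // cols, (c + 1) * width // cols
            pixels = [frame[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            count = len(pixels)
            # Integer mean of each color channel across the cell.
            led_row.append(tuple(sum(p[ch] for p in pixels) // count for ch in range(3)))
        leds.append(led_row)
    return leds

# A tiny 2 x 4 "scene": red on the left, blue on the right.
RED, BLUE = (255, 0, 0), (0, 0, 255)
frame = [
    [RED, RED, BLUE, BLUE],
    [RED, RED, BLUE, BLUE],
]
two_leds = led_colors(frame, rows=1, cols=2)  # one LED per half
one_led = led_colors(frame, rows=1, cols=1)   # a single LED for the whole scene
```

With two LEDs, one glows red and the other blue. With a single LED, the whole scene collapses to a dim purple, which is about as much detail as the edge of your vision asks for.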

SparseLight technology can be applied to both virtual reality headsets and augmented reality headsets to increase immersion, and Microsoft Research even reckons it can help cut down on some of the motion sickness problems users experience when wearing these headsets too. Read more about SparseLight here.